AIXBIO Focus #1 - 07/07/23 - AI & Regulation

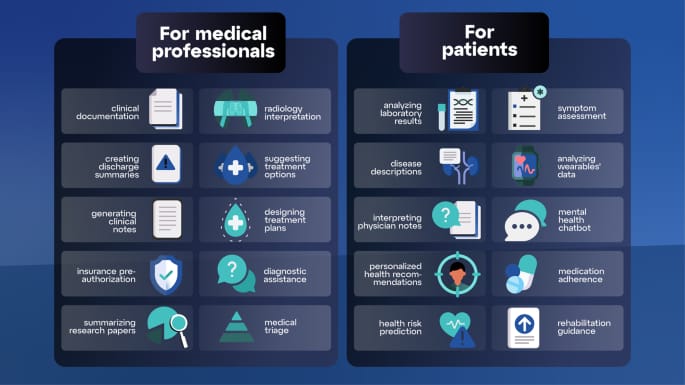

The Urgent Need for Regulatory Oversight of Large Language Models in Healthcare

The rapid development of large language models (LLMs), such as OpenAI's GPT-4 and Google's Bard, has raised important questions about regulatory oversight in healthcare. LLMs have the potential to be used in various healthcare applications, including clinical documentation, research summarization, and answering patient questions. However, their unique training methods and capabilities require careful regulation, especially when it comes to patient care. GPT-4, the newest version, can read text in images and analyze its context, which increases both the potential risks and the potential benefits of this technology. Regulatory oversight is needed to ensure that LLMs can be used safely by medical professionals without causing harm to patients or compromising their privacy. The FDA has been leading the way in regulating AI-based medical technologies and has adapted its regulatory framework to address AI and machine learning technologies. However, LLMs present new challenges that may require a new regulatory category. LLMs differ from previous deep learning methods in terms of scale, complexity, broad applicability, real-time adaptation, and societal impact. These unique characteristics require a tailored approach to regulation, taking into account challenges such as interpretability, fairness, and unintended consequences. The regulation of LLMs should also address issues related to data privacy and security. Moving forward, regulators should consider creating a new regulatory category for LLMs, providing guidelines for their deployment in healthcare, and ensuring transparency, accountability, and fairness in their use.

FDA Seeks Feedback on Regulating AI and Machine Learning in Drug Development and Manufacturing

The U.S. Food and Drug Administration (FDA) has released a discussion paper requesting feedback from stakeholders in the pharmaceutical industry on how to regulate artificial intelligence (AI) and machine learning (ML) in drug development and manufacturing. AI and ML have already made an impact in these areas, but there are unique regulatory challenges that need to be addressed. The FDA is seeking input on issues such as ensuring compliance with current Good Manufacturing Practice (CGMP) when AI algorithms change manufacturing processes, addressing biases in AI decision-making, and protecting patient data privacy. The FDA highlights potential benefits of AI/ML in drug development and manufacturing, such as creating predictive models to assess a patient's reaction to a drug, optimizing manufacturing processes, monitoring product quality, and detecting adverse events. The agency also outlines three overarching principles for using AI/ML in these fields: human-led governance and transparency, quality and reliability of data, and model development, performance, monitoring, and validation. The FDA is particularly interested in feedback on ensuring the accountability and trustworthiness of complex AI/ML systems, preventing bias and errors in data sources, securing cloud-based manufacturing data, storing data for regulatory compliance, and complying with regulations when ML algorithms adapt processes based on real-time data. Stakeholders are encouraged to provide feedback to the FDA by August 9, 2023, to help shape the agency's regulatory framework for AI and ML in drug development and manufacturing.

The EU's Opportunity to Lead: Establishing a Global Regulatory Framework for AI Advancements

As artificial intelligence (AI) continues to advance at an exponential rate, the European Union (EU) has the opportunity to take the lead in establishing a global regulatory framework for AI. The EU’s neutrality and strong regulatory tradition make it well-suited to shape responsible AI development and use. The COVID-19 pandemic has demonstrated both the benefits and risks of technology, highlighting the need for responsible use and regulation. AI has the potential to revolutionize fields such as medicine, climate change, and agriculture, but it also poses risks such as the spread of misinformation and job displacement. AI models are developing rapidly, and there is a possibility of recursively self-improving AIs in the near future. Concerns about the potential risks of AI development are widespread, with experts warning about catastrophic AI systems and the challenge of ensuring that AI systems align with human values. The EU has proposed legislation, the AI Act, which is currently in the final stages of the legislative process. However, the EU’s policy intervention is limited to regulating AI use within its own borders. To establish itself as a crucial player in AI diplomacy, the EU should expand its foreign policy to include AI regulation. The EU can lead a global effort to reduce the risks associated with AI by coordinating investment in AI safety and alignment research, promoting transparency, and fostering global consensus on regulations. The EU’s neutrality and credibility, along with its regulatory creativity and speed, make it well-positioned to kickstart this process. The primary objective should be to establish a regulatory framework that addresses the range of risks associated with AI.

European Companies Express Concerns over EU's AI Regulations in Open Letter

Over 150 executives from European companies, including Renault, Heineken, Airbus, and Siemens, have signed an open letter criticizing the European Union's (EU) recently approved artificial intelligence (AI) regulations. The executives argue that the regulations outlined in the AI Act could "jeopardize Europe's competitiveness and technological sovereignty." The AI Act, which was greenlit by the European Parliament on June 14th, imposes strict rules on generative AI models, requiring providers of such models to register their products with the EU, undergo risk assessments, and meet transparency requirements. The signatories of the letter claim that these rules could lead to disproportionate compliance costs and liability risks, potentially driving AI providers out of the European market. They argue that the regulations are too extreme and risk hindering Europe's technological ambitions. The companies are calling for EU lawmakers to adopt a more flexible and risk-based approach to regulating AI. They also suggest the establishment of a regulatory body of experts within the AI industry to monitor the application of the AI Act. Some critics of the open letter, including Dragoș Tudorache, a Member of the European Parliament involved in developing the AI Act, claim that the complaints are driven by a few companies and emphasize that the legislation provides industry-led processes, transparency requirements, and a light regulatory regime.

Implications of Data Privacy Regulations and AI Governance in Europe, the UK, and US

This article discusses data privacy regulations and AI regulation in Europe, the UK, and the US. It highlights the concerns with AI and privacy, including input concerns related to the use of large datasets that can include personal information, and output concerns related to the use of AI to arrive at certain conclusions. In Europe, the General Data Protection Regulation (GDPR) is the comprehensive privacy law that applies to all industries and all personal data, including automated processing of data. The GDPR requires informing individuals about how their data will be used and conducting data protection impact assessments. The European Commission has proposed new rules and actions to make Europe a global hub for trustworthy AI. Although EU law no longer applies directly in the UK, the AI Act has extraterritorial reach, and UK businesses need to maintain data protection equivalence with the EU. The UK government has published a white paper outlining its framework and approach to the regulation of AI, which demonstrates divergence from the EU's approach. The US has a myriad of privacy laws based on jurisdiction and sector, but specific AI guidance is expected. The Federal Trade Commission (FTC) is the enforcement authority for data privacy issues in the US, and it has issued reports and enforcement actions related to AI and privacy issues. Additionally, the article mentions the California Consumer Privacy Act (CCPA) and similar privacy regulations enacted in Virginia, Colorado, and Connecticut. The article concludes by emphasizing the need for AI projects to comply with regulations and safeguard personal data, and the importance of understanding how AI systems use and disclose information.

Japan Embraces Light Touch AI Regulation Amid Global Debate on Approaches

Japan is proposing a light-touch approach to regulating artificial intelligence (AI) in order to quickly take advantage of the potential benefits of the technology. The Japanese government aims to address the challenges caused by its declining population through the use of AI. While Japan joins the US and the UK in favoring a hands-off stance on AI development, businesses are likely to follow the stricter rules proposed by the EU to ensure access to the lucrative European market. The EU has developed a comprehensive set of regulations through the EU AI Act, which includes requirements for AI developers to declare training data and minimize illegal or harmful content generation. Some EU companies are concerned that these regulations will put them at a disadvantage compared to non-EU companies. Japan is considering aligning its regulatory approach with that of the US, which focuses on economic growth and aims to capitalize on its expertise in chip manufacturing. While the competition to become the global standard for AI regulation is intensifying, experts argue that the EU's approach is likely to become the standard adopted by major producers to ensure access to the European market. They believe that innovation can still occur in a regulated environment and that regulation is not necessarily an obstacle to innovation or profits. They cite the example of the car industry, which eventually found innovative ways to reduce carbon emissions despite resisting environmental regulations for decades.

China's 'Heavy-Handed' Regulation Jeopardizes Progress in AI Race, Falling Further Behind US

China risks falling further behind the United States in the race to develop artificial intelligence (AI) due to "heavy-handed" regulation, according to experts. Despite China's impressive display of innovation at the World Artificial Intelligence Conference in Shanghai, it still lags behind its biggest competitor, the US. China has made significant strides in certain areas, such as military and cybersecurity, but overall, experts agree that it is slightly behind the US in AI development. There are three key ingredients needed for successful AI development: high-quality data, top-notch expertise, and advanced software and hardware. While China has more data than Western countries in certain fields, it is constrained by the smaller amount of information available in Chinese. In terms of expertise, there is brilliant Chinese talent, but recent developments like Microsoft moving its AI centers away from China may hinder access to the best people in the future. China's hardware and software development is hampered by US export regulations, which restrict China's access to high-quality chips and technology. However, China does have advantages in areas like data labelling, where better access to affordable labor gives it an edge. The main potential drawback for China is regulation, as experts believe its AI industry is likely to be more heavily regulated than those of other countries. This may hold the industry back and create uncertainty for tech companies. Overall, China is considered a key player in the AI race, but it needs to address regulatory issues to further its development and remain competitive with the US.

India Can Find a Middle Ground by Combining EU and US Models of AI Regulation

The article discusses the need for regulation of artificial intelligence (AI) and suggests that India can blend the EU and US models of AI regulation to create an effective regulatory framework. The author acknowledges that while regulation of AI is difficult, it is necessary due to its capacity to influence society and outcomes in various spheres. The challenge is to ensure that rules do not stifle innovation. The author argues that regulation provides a cloak of legitimacy to the industry and helps quell uncertainties surrounding AI's societal impact. The article highlights the challenges involved in regulating AI, including the rapidly evolving nature of AI technology, the lack of a universally accepted definition of AI, the diverse nature of AI that requires different regulatory approaches, and the need for international consensus on regulation. The EU and the US models of AI regulation are discussed, with the EU taking a proactive approach to protect individual rights and data privacy, while the US favors a decentralized approach to avoid overregulation and promote innovation. The author suggests that India should consider a balanced approach to AI regulation by learning from both the EU and US models. India needs a robust framework that safeguards individual rights and data protection without impeding AI development, similar to the EU model. At the same time, aspects of the decentralized US model can be adopted to address specific sector needs and encourage innovation while avoiding overregulation. In conclusion, the article emphasizes the importance of AI regulation in mitigating risks and ensuring responsible use of AI technology. By blending the EU and US models of regulation, India can create a regulatory framework that promotes innovation while safeguarding individual rights and data privacy.

AI & Law: Examining Global Trends and Navigating Regulation Reforms in Canada

The European Union (EU) has approved the Artificial Intelligence Act (EU AI Act), which aims to regulate the use of artificial intelligence (AI) and address potential risks associated with AI technology. The EU AI Act takes a risk-based approach and imposes restrictions on high-risk technologies, such as recommendation algorithms, while also requiring organizations to label AI-generated content. This legislation has the potential to set global standards for AI regulation. Following the EU's lead, several countries, including Canada, have announced plans to develop their own AI regulatory frameworks. In Canada, the federal government has shown dedication to addressing the concerns and risks associated with AI. Quebec's Act to modernize legislative provisions regarding the protection of personal information (Law 25) introduced amendments to the privacy regime governing the collection, use, and communication of personal information in the public and private sectors. Law 25 imposes obligations on organizations, including designating a person in charge of protecting personal information and promptly notifying the appropriate authorities in the event of a confidentiality incident. Additionally, Canada is undergoing a significant transformation in its federal privacy regulatory landscape with the introduction of the Digital Charter Implementation Act, 2022 (Bill C-27). If adopted, Bill C-27 will establish three new pieces of legislation: the Consumer Privacy Protection Act, the Personal Information and Data Protection Tribunal Act, and the Artificial Intelligence and Data Act. These measures will directly impact businesses that develop, deploy, or use AI systems. To comply with these regulatory frameworks and mitigate the associated risks, businesses will need to establish robust internal AI governance structures. The speed of AI system development and the increasing number of commercial applications necessitate careful consideration of the potential risks. Organizations can adopt measures to effectively mitigate these risks. McCarthy Tétrault, a Canadian law firm, offers assistance to businesses in formulating strategies to mitigate commercial and legal risks associated with AI systems.