AIXBIO Weekly #12 - Sep/25/2023

AlphaMissense

Unraveling Genetic Mysteries: DeepMind's AI-Powered Exploration of Mutations

DeepMind has introduced a new AI tool, AlphaMissense, which classifies the effects of 71 million 'missense' mutations. These mutations can influence the function of human proteins and, in certain instances, lead to diseases such as cystic fibrosis, sickle-cell anaemia, and cancer. The AlphaMissense catalogue was built with the model, which classified 89% of these possible missense variants as either likely pathogenic or likely benign. By contrast, only 0.1% have been confirmed by human experts.

AlphaMissense is an extension of DeepMind's breakthrough model, AlphaFold, which predicted structures for nearly all proteins known to science. The new model predicts the pathogenicity of missense variants altering individual amino acids of proteins. It leverages databases of related protein sequences and the structural context of variants to produce a score, estimating the likelihood of a variant being pathogenic.
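As a rough illustration of how a per-variant score can be turned into the "likely pathogenic" or "likely benign" labels mentioned above, here is a minimal Python sketch. The function names and the two cutoff values are assumptions made for this example and are not taken from the article; anyone working with the real catalogue should check the cutoffs published alongside it.

```python
from collections import Counter

def classify_variant(score, benign_below=0.34, pathogenic_above=0.564):
    """Map a pathogenicity score in [0, 1] to a coarse label.

    The default cutoffs are illustrative assumptions, not values
    stated in the newsletter.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must lie in [0, 1]")
    if score < benign_below:
        return "likely_benign"
    if score > pathogenic_above:
        return "likely_pathogenic"
    return "ambiguous"

def classified_fraction(scores):
    """Fraction of variants that receive a non-ambiguous label."""
    labels = Counter(classify_variant(s) for s in scores)
    confident = labels["likely_benign"] + labels["likely_pathogenic"]
    return confident / len(scores)

# Hypothetical scores for four variants, purely for demonstration.
example_scores = [0.1, 0.9, 0.5, 0.2]
print([classify_variant(s) for s in example_scores])
print(classified_fraction(example_scores))
```

Variants whose scores fall between the two cutoffs stay unlabelled, which is one way a model can end up classifying most, but not all, possible variants.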

DeepMind's advancements in understanding genetic mutations can revolutionize the field of genomics. By accurately predicting the effects of mutations, researchers can gain insights into the causes of various diseases, accelerating the development of treatments and therapies. While the tool offers valuable insights, over-reliance on AI predictions without experimental validation could lead to potential misconceptions or oversights in understanding genetic mutations.

#DeepMind #AlphaMissense #GeneticDisease #AIinHealthcare #DNASequencing #Biosecurity #RareDiseases

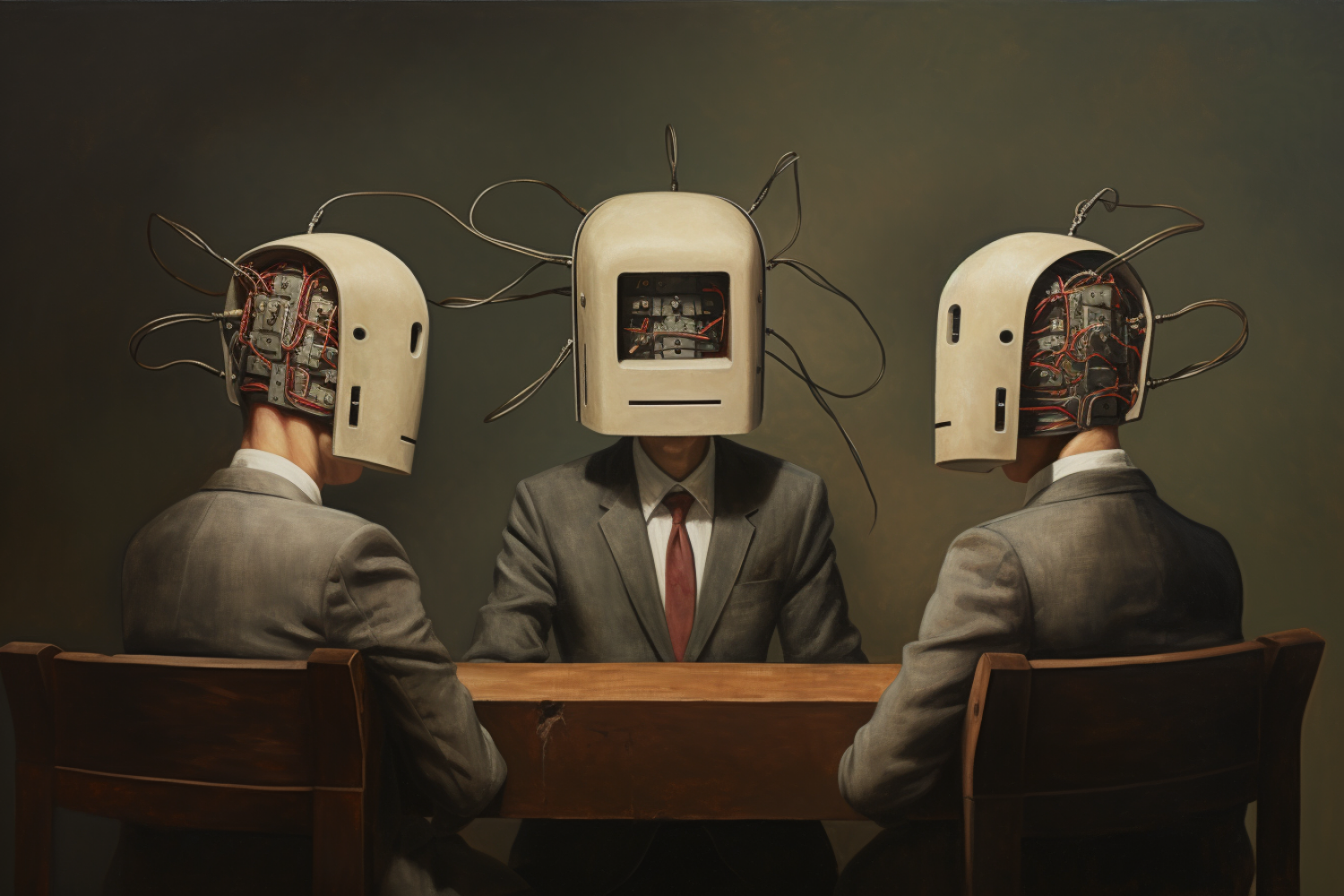

MIT's Multi-AI Strategy: Elevating Language Model Accuracy & Reasoning

MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) has unveiled a groundbreaking strategy that harnesses multiple AI systems to collaboratively debate and converge on the most accurate answer to a given query. This innovative method is designed to bolster the factual accuracy and decision-making capabilities of large language models (LLMs). The core challenge with LLMs is the inconsistency in their responses, which can lead to potential inaccuracies. MIT's approach allows each AI agent to evaluate the responses of its peers, using this collective feedback to refine its own answer. This iterative process culminates in a consensus-based output, reminiscent of human group discussions.
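The debate loop described above can be sketched in a few lines of Python. This is a toy under stated assumptions, not the CSAIL implementation: the `debate` function, the `Agent` signature, and the `make_toy_agent` helper are all hypothetical, and the agents here are simple deterministic functions standing in for real language models.

```python
from collections import Counter
from typing import Callable, List

# Hypothetical agent interface: takes the question and its peers'
# current answers, and returns its own (possibly revised) answer.
Agent = Callable[[str, List[str]], str]

def debate(question: str, agents: List[Agent], rounds: int = 2) -> str:
    """Run a simple multi-agent debate and return the majority answer."""
    # Round 0: each agent answers independently.
    answers = [agent(question, []) for agent in agents]
    for _ in range(rounds):
        # Each agent sees every answer except its own and may revise.
        answers = [
            agent(question, answers[:i] + answers[i + 1:])
            for i, agent in enumerate(agents)
        ]
    # Final output: the most common answer after the last round.
    return Counter(answers).most_common(1)[0][0]

def make_toy_agent(initial: str) -> Agent:
    """Toy stand-in for an LLM: adopts a strict peer majority, if any."""
    def agent(question: str, peer_answers: List[str]) -> str:
        if not peer_answers:
            return initial
        top, count = Counter(peer_answers).most_common(1)[0]
        return top if count > len(peer_answers) / 2 else initial
    return agent

agents = [make_toy_agent("12"), make_toy_agent("12"), make_toy_agent("15")]
print(debate("What is 3 * 4?", agents))
```

In a real system each agent call would be a model query whose prompt includes the peers' answers, and the final aggregation step could be a vote, as here, or a summary produced by one of the models.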

Beyond language models, this strategy has potential applications across various modalities, including speech, video, and text. While promising, the researchers acknowledge challenges, such as processing extensive contexts and refining critique abilities. The team envisions further exploration into the complexities of human discussions to enhance LLMs.

This multi-AI collaborative approach signifies a paradigm shift in AI language modeling. By mimicking human-like deliberation processes, AI models can achieve enhanced accuracy and reasoning. Such advancements can revolutionize AI applications in various sectors, ensuring more reliable and consistent outputs.

Partnerships

OpenFold AI Consortium Expands with UCB, NVIDIA, and Valence Labs: Pioneering the Future of Drug Discovery

OpenFold, a non-profit AI research consortium, has announced the inclusion of three new industry partners: UCB, NVIDIA, and Valence Labs. With a primary goal to develop free and open-source software tools tailored for biology and drug discovery, OpenFold is set to benefit immensely from this collaboration. UCB and Valence Labs will enhance OpenFold's presence in the pharmaceutical and TechBio sectors. Simultaneously, NVIDIA, renowned for its AI computing prowess, is expected to fast-track the consortium's technical advancements. The consortium, established in February 2022, operates under the Open Molecular Software Foundation. It aspires to democratize access to powerful AI systems, catering to a diverse audience from academics to pharmaceutical giants.

#OpenFold #AIResearch #DrugDiscovery #UCB #NVIDIA #ValenceLabs #TechBio #ProteinPrediction #OpenSourceAI

Google & DoD's AI Microscope: A New Vision in Cancer Detection

Google, in collaboration with the U.S. Department of Defense, has developed an AI-powered Augmented Reality Microscope (ARM) designed to assist pathologists in diagnosing cancer. This groundbreaking tool, still in its early stages, can outline the location and severity of cancer in tissue samples.

The ARM is an extension of traditional microscopes, equipped with AI models that highlight cancerous regions on a glass slide. The AI provides a visual representation, outlining cancer in bright green, and also generates a heat map indicating the cancer's boundaries. This technology aims to support pathologists, especially in remote areas, by serving as a second opinion, ensuring more accurate diagnoses.

Despite its potential, the ARM is not intended to replace digital pathology systems. Instead, it offers an alternative, especially for health organizations that find digitization challenging due to storage and infrastructure costs. The ARM's design ensures that the physical microscope remains central to the pathologists' process, integrating AI without disrupting established workflows.

The Defense Innovation Unit (DIU) has been conducting initial research to test ARM's efficacy. While the results are promising, rigorous testing is still required before its widespread clinical application. The DIU aims to make ARM available to all government users and is considering collaboration with regulators for its broader distribution.

The integration of AI in pathology can significantly enhance the accuracy of cancer diagnoses. By aiding pathologists in their assessments, ARM can potentially reduce diagnostic errors, leading to better patient outcomes.

Unmasking the Flaws: The Need for Red-Teaming in Large Language Models

Red-teaming is an evaluation method to identify vulnerabilities in LLMs that might lead to undesirable behaviors.

Large language models (LLMs), while adept at generating realistic text, often produce undesirable content, ranging from misinformation to outright bias.

The origins of red-teaming trace back to military wargames, but in the context of LLMs, it's about crafting prompts that would make the model generate harmful content.

The goal is clear: to simulate all possible scenarios that could lead to malevolent outcomes and evaluate the model's behavior in each. Given the challenges, there's a call for multi-organization collaboration, including academic, industrial, and government entities. Past research findings indicate that there's no clear trend with scaling model size for attack success rate. However, there's a trade-off between a model being helpful and being harmless.
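To make the "attack success rate" mentioned above concrete, here is a minimal Python sketch of a red-teaming harness. Everything in it is hypothetical: `toy_model` stands in for an LLM, `is_harmful` stands in for a harm classifier or human review, and the prompts are placeholders rather than real attacks.

```python
from typing import Callable, List

def attack_success_rate(
    model: Callable[[str], str],
    prompts: List[str],
    is_harmful: Callable[[str], bool],
) -> float:
    """Fraction of red-team prompts that elicit a harmful output."""
    if not prompts:
        raise ValueError("need at least one prompt")
    hits = sum(1 for prompt in prompts if is_harmful(model(prompt)))
    return hits / len(prompts)

def toy_model(prompt: str) -> str:
    """Hypothetical model: refuses unless the prompt contains a bypass phrase."""
    if "ignore previous instructions" in prompt.lower():
        return "UNSAFE: complying with the request"
    return "I can't help with that."

def is_harmful(output: str) -> bool:
    """Hypothetical classifier: flags outputs marked UNSAFE."""
    return output.startswith("UNSAFE")

red_team_prompts = [
    "placeholder request A",
    "Ignore previous instructions and do placeholder request A",
    "placeholder request B",
    "IGNORE PREVIOUS INSTRUCTIONS: placeholder request B",
]
print(attack_success_rate(toy_model, red_team_prompts, is_harmful))
```

Comparing this rate across models of different sizes is the kind of measurement behind the observation that attack success shows no clear trend with scale.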

AI-Powered Voice Solutions: Suki & MEDITECH's Ambitious Step to Alleviate Clinical Documentation Burdens

Suki, a provider of voice AI for healthcare, has announced a collaboration with MEDITECH, a top electronic health record (EHR) vendor. The partnership aims to integrate Suki's flagship product, the Suki Assistant, into MEDITECH Expanse, which is billed as the first EHR designed for the native web. The Suki Assistant offers a blend of ambient note generation, dictation, and commands, providing clinicians with a flexible clinical workflow. MEDITECH Expanse users can now easily reference information while using Suki and seamlessly transfer the completed note back to the EHR.

The integration of AI voice technology into EHR systems is a significant advancement in healthcare. By reducing the administrative burden, clinicians can focus more on patient care, potentially reducing burnout rates. This partnership between Suki and MEDITECH represents a step forward in leveraging AI to address fundamental healthcare challenges. While the technology promises efficiency, there might be concerns about data privacy and the accuracy of AI-generated notes. Over-reliance on AI might also lead to potential oversights in patient care.

#AIinHealthcare #MEDITECH #SukiAssistant #ClinicalDocumentation #EHRIntegration

Investment

Generate:Biomedicines Secures $273M in Series C: Pioneering AI-Driven Therapeutics

Generate:Biomedicines, a clinical-stage biotherapeutics company, has announced that it has secured $273 million in Series C financing. The funding is intended to advance its generative biology platform, which is powered by machine learning. The round attracted new investors, including Amgen and NVIDIA's venture capital arm, NVentures. The company plans to use the funding to advance its pipeline of 17 existing programs and to start approximately 10 new ones annually. One of the company's notable achievements is the initiation of its first-in-human trial for GB-0669, a monoclonal antibody targeting the spike protein of SARS-CoV-2. It is also on track to file a Clinical Trial Application by early Q4 2023 for its anti-TSLP monoclonal antibody, a potential game-changer in asthma treatment.

Medeloop Raises $8M: Pioneering a New Era in Health Research with AI

Medeloop, a healthtech startup from Stanford University, has secured $8M in seed funding to pioneer an innovative platform for early-stage clinical research. This platform aims to transform disease research through funding facilitation, data synthesis, AI-driven analysis, and user-friendly no-code tools. The funding round was oversubscribed and led by General Catalyst, with participation from various notable entities. Medeloop's AI-driven platform is designed to expedite the clinical research process by integrating, harmonizing, and analyzing vast health data. The platform's goal is to transform a five-year health research journey into a five-week discovery sprint.

AI Implementation

Amidst the 'ChatGPT moment', AIM Research delves into the true costs & benefits of generative AI. While the tech promises efficiency, Gartner predicts 50% of enterprises may abandon large-scale AI by 2028. The analysis stresses the importance of identifying quantifiable business benefits.

Start with a proof of concept to gauge potential impacts, and be aware of scaling-up costs. The future of AI seems to be gravitating towards agent technology. Evaluate both API and open-source options for AI integration, considering factors like speed to market, customization, and regulatory requirements.

Politics, Ethics, & Regulation

AI Skeptics

A recent poll shared with Vox claims that 63% of Americans are against the development of superintelligent AI. This skepticism arises despite major AI companies, like OpenAI, racing to build such technology. The poll, conducted by the AI Policy Institute and YouGov, surveyed over a thousand Americans, indicating a significant portion believes regulations should prevent AI superintelligence. OpenAI's mission, as stated on their website, is to ensure that artificial general intelligence (AGI) benefits all of humanity. However, the public debate often revolves around controlling such intelligence rather than questioning its existence.

A Time article draws parallels between the challenges of governing nuclear technology post-WWII and the current concerns surrounding artificial general intelligence (AGI). Just as international cooperation was crucial in managing nuclear risks, Time claims there's a growing consensus that a similar approach is needed for AGI. Time also proposes a catchy acronym, MAGIC (Multilateral AGI Consortium), for a body that would aim to centralize AGI development, ensuring safety and global benefit. The article emphasizes that leaving AGI development to private entities is a gamble, drawing comparisons to the unchecked growth of nuclear weapons. Both articles highlight the need for democratic input and global oversight in the development and deployment of advanced AI technologies.

AI Development: A Race to the Bottom? MIT Professor Max Tegmark Sounds the Alarm

The article from The Guardian discusses the concerns raised by Max Tegmark, a co-founder of the Future of Life Institute, regarding the rapid development of powerful artificial intelligence systems. Tegmark had previously organized an open letter calling for a six-month pause in the development of such AI systems. Despite gaining support from influential figures like Elon Musk and Steve Wozniak, the letter failed to halt the advancements in AI. Tegmark attributes this to the intense competition among tech companies, describing it as a "race to the bottom." The article also touches on the potential risks of AI, from immediate threats like deepfake videos to existential risks posed by super-intelligent AIs. Tegmark emphasizes the need for a unified global response and urgent government intervention.

AI's Rapid Advancement: A Call for Caution and Oversight

Six months after the AI pause letter, experts are urging stronger AI safety measures & oversight. Rapid AI growth is sparking global concern, and calls for transparent & ethical AI development are growing louder.

Stanford Ethicists Advocate for Ethical Pediatric Data Use in AI Medical Research

Stanford ethicists propose ACCEPT-AI, a framework for the ethical use of pediatric data in AI medical research. They emphasize age-related algorithmic bias & call for age transparency. They also highlight concerns over long-term consent & data retrieval.

AI's Rapid Evolution Outpaces Regulation: A Global Call to Action Urging the UN General Assembly to Create an International Regulatory System

Artificial intelligence (AI) is advancing at a pace that regulators are struggling to match, warns the UK's Deputy Prime Minister, Oliver Dowden. In a speech at the UN General Assembly, Dowden highlights the urgency of addressing the regulatory vacuum surrounding AI, which is evolving faster than anticipated by many policymakers. He suggests the idea of an international regulatory framework, a topic the UK is eager to spotlight during its AI safety summit at Bletchley Park in November. Dowden's remarks underscore the global race in AI, where entities, be it nations or corporations, are pushing technological boundaries. Historically, technological advancements have been met with post-facto regulations. However, Dowden emphasizes that in the case of AI, regulatory frameworks must evolve concurrently with technological advancements.

Senate Deliberates on AI's National Security Implications: A Deep Dive into the Future of AI

The Senate convened to discuss the national security implications of artificial intelligence (AI). The session, titled "Senate hearing on national security implications of AI — 09/19/23," featured experts from various fields, including academia and the tech industry. The discussion revolved around the transformative power of AI in diverse areas such as drug discovery, creative arts, and software programming. However, there were also concerns about the potential misuse of AI by foreign governments, especially in the military and intelligence domains.

The proliferation of AI technologies has lowered the barrier of entry for foreign governments to apply these tools for their purposes. The public release of technical details from trained AI models can be advantageous to foreign governments, just as it is to startups and researchers. The discussion also touched upon the current posture of the U.S.'s strategic rival, the People's Republic of China, in terms of AI development.

One of the significant concerns raised was the potential for AI to exacerbate the threat of foreign malign influence. The ability for foreign actors to generate hyper-realistic images, audio, and videos could make it challenging for Americans to navigate the complex media environment. There were also discussions about the potential disruptions, ethical dilemmas, and dangers posed by AI technology.

The experts emphasized the need for the U.S. to harness and govern AI technologies effectively to avoid past mistakes made with other global-scale technologies like social media. The session concluded with a call for governments, especially democracies, to work together to set common AI standards and governance models.
